Historical background of managed services misconfigurations

When cloud providers launched early managed storage like S3 and later Azure Blob, the main promise was to hide infrastructure complexity behind simple APIs. That convenience came with a subtle trap: security and governance knobs also moved into the console, where a single checkbox could expose terabytes of data. The story of serviços gerenciados aws s3 erros de configuração is essentially a story of defaults: initially permissive ACLs, public buckets for quick prototyping, weak monitoring. As adoption grew, breaches caused by misconfigured storage and RDS instances pushed vendors to harden defaults, add wizards and security scorecards, yet human error and rushed deployments remain the dominant root cause.

Core principles for safe configuration

Despite the variety of platforms, the same basic principles apply to almost every managed service. First, treat identity and access management as code, not as a manual ritual in the console. Second, start from least privilege and add only what you can justify in writing. Third, isolate environments and networks so that a single mistake does not turn into a systemic leak. When you think about como configurar s3 e blob storage com segurança, add one more rule: assume that “public” will sooner or later be discovered and indexed. Finally, back every change with logging, alerts and periodic reviews so drift is detected early instead of after an incident.

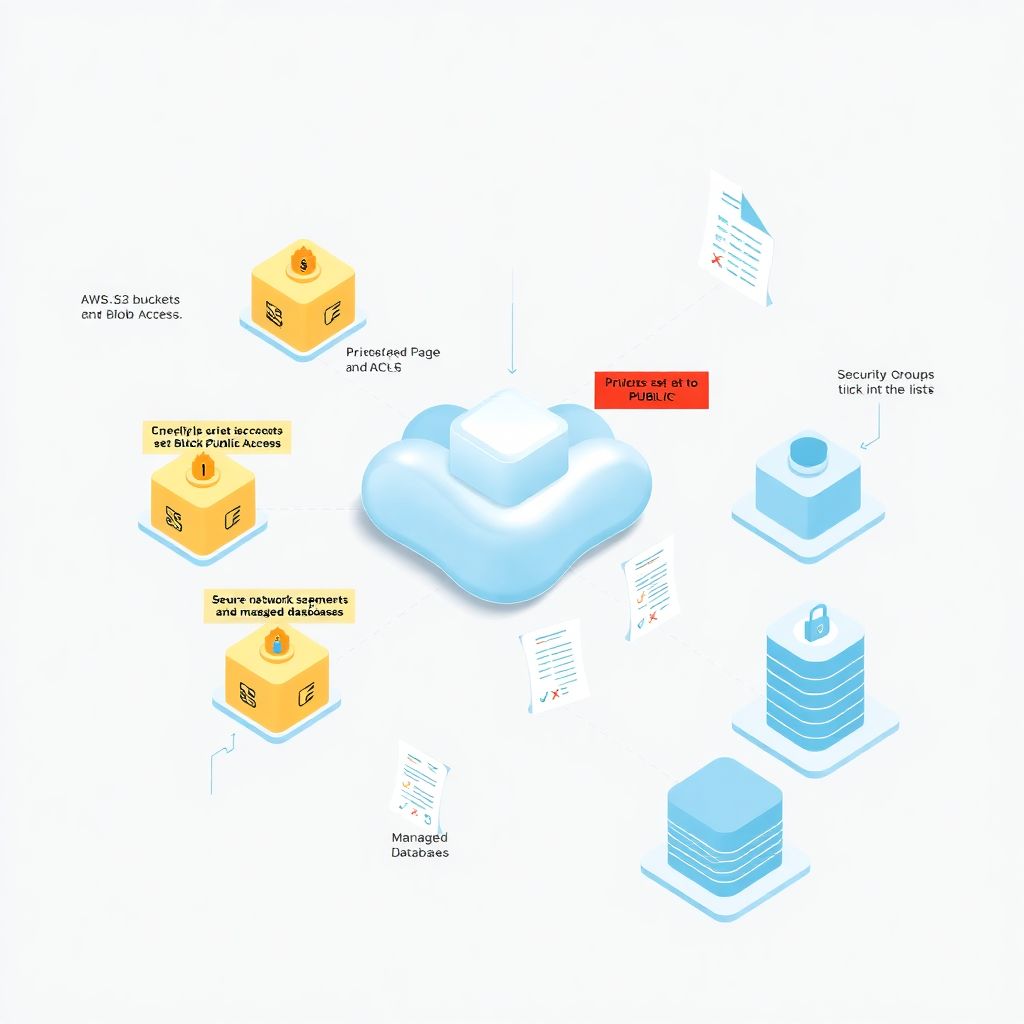

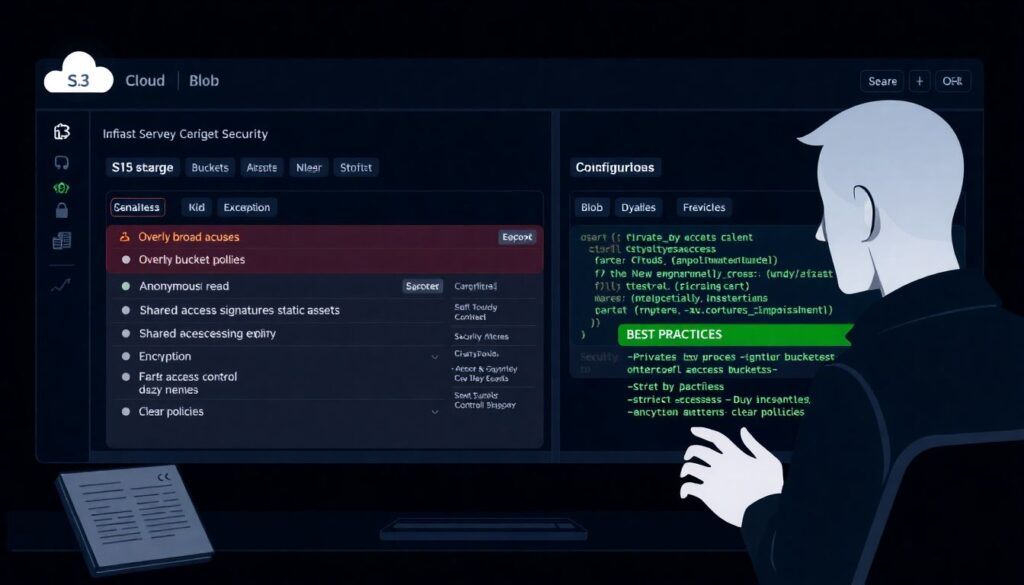

Concrete examples in storage (S3 / Blob)

In object storage, misconfigurations tend to be brutally simple. Overly broad bucket policies, anonymous read on “static” assets, or shared access signatures without expiry still power many leaks. A careful engineer focuses on melhores práticas segurança storage na nuvem s3 blob: private by default, block public access at the account level, and route exposure through hardened endpoints such as CDNs or application gateways. Encryption keys should be managed centrally, with rotation and clear ownership. Lifecycle rules and versioning help limit blast radius of accidental deletions. The key is to design a data classification model first, then map each class to specific controls, instead of flipping checkboxes ad hoc.

Database layer: RDS and other managed engines

With databases, the pattern changes: data is rarely public, but lateral movement and credential sprawl are common. Misconfiguring RDS security groups with 0.0.0.0/0, reusing admin passwords across environments, or disabling minor version upgrades are all classic pitfalls. Boas práticas configuração rds banco de dados gerenciado start with network isolation, restricted inbound sources, and mandatory TLS. Add automated backups, multi‑AZ or zone‑redundant deployment, and strict parameter baselines for logging and audit. Managed services can enforce encryption at rest and in transit; skipping those options today is hard to justify. Finally, integrate RDS auth with central identity providers so you can revoke access without touching every instance.

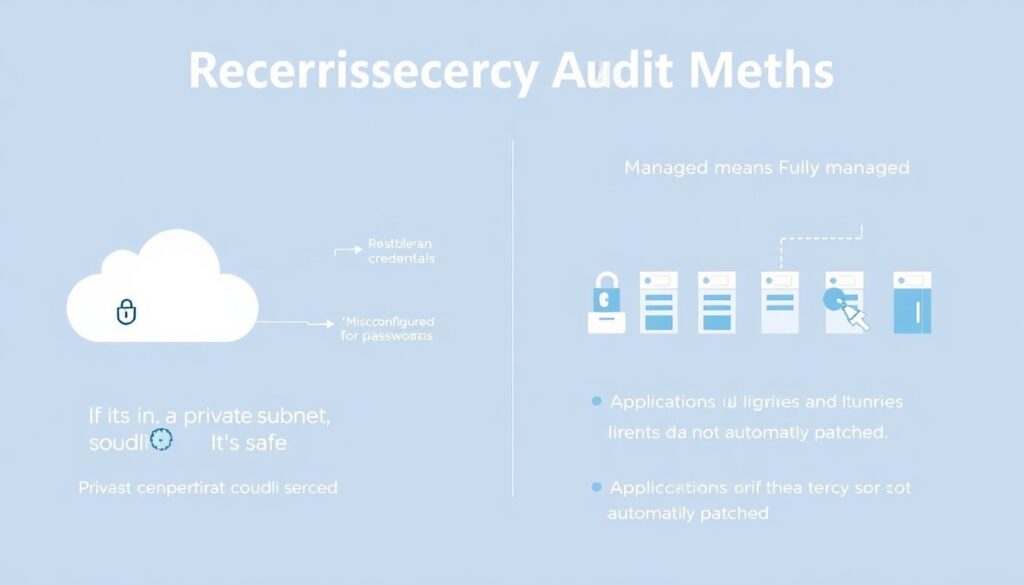

Typical misconceptions and hidden traps

Several myths keep reappearing in audits. “If it’s in a private subnet, it’s safe” ignores compromised credentials or misrouted VPNs. “Managed means fully managed” leads teams to assume that providers patch everything, including application engines and libraries, which is untrue. Another misconception is that storage misconfigurations only happen through consoles; in reality, misaligned IaC templates can replicate flawed patterns across dozens of accounts. Even experienced teams underestimate data exposure via logs, backups and test buckets. This is where consultoria configuração segura serviços gerenciados aws azure often adds value: by mapping real data flows, not just resource lists, and showing how small gaps combine into systemic risk.

Practical step‑by‑step hardening approach

To make this concrete, it helps to adopt a repeatable sequence rather than sporadic “security days”. A lean but effective workflow can look like this:

1. Inventory all managed services and classify data sensitivity per resource.

2. Lock down network exposure, removing public endpoints where feasible and enforcing private access paths.

3. Review IAM policies and keys, eliminating wildcards and stale accounts.

4. Standardize encryption, backups and retention using shared modules or IaC templates.

5. Enable logging, alerts and periodic compliance checks, then treat deviations as operational incidents. Applied consistently, this turns configuration from a one‑off task into a continuous control loop.

Future outlook: configuration by 2030

By 2026 we already see strong movement toward “secure‑by‑construction” platforms. Policy‑as‑code, AI‑assisted reviews and default‑deny public access are becoming normal. Over the next few years, expect managed services to ship with opinionated blueprints: you will choose a data classification, and the platform will auto‑generate policies, networks and keys accordingly. Misconfigurations will not disappear, but they will shift toward custom glue code and multi‑cloud integrations. Vendors will likely expose richer guardrails APIs, while auditors focus more on intent than on raw settings. Teams that internalize melhores práticas segurança storage na nuvem s3 blob today will be better positioned to evaluate and fine‑tune those evolving guardrails instead of fighting their constraints.