Why security logging in cloud‑native suddenly matters so much

If you’re running anything serious on Kubernetes or serverless in 2026, security logging and observability are no longer “nice to have”; they’re what keeps you from learning about a breach on social media. Cloud‑native stacks spread your workload across clusters, regions and providers, which means traditional syslog‑only thinking breaks fast. The same request can cross dozens of pods, services and managed components, and without a coherent view of logs, metrics and traces, incident response turns into guesswork. The goal now is not just storing logs, but turning them into a real‑time security sensor for your entire platform.

How we got here: from log files to full‑stack observability

Back in the early 2000s, “logging” meant rotating text files on a few VMs and maybe shipping them to a SIEM. As virtual machines multiplied, ops teams added collectd, agents and clunky dashboards, but the security story stayed reactive. The shift to containers and microservices around 2014 broke that model; suddenly you had thousands of short‑lived instances dumping logs to ephemeral filesystems. That pain gave rise to observability tools for cloud‑native environments, which unify logs, metrics and traces so teams can actually follow a request through service meshes, queues and managed databases while preserving security context and user identity along the way.

From monitoring to observability: what changes for security

Traditional monitoring asked, “Is my server OK?” Observability asks, “Why is this specific request misbehaving right now?” For security, that shift is huge. You’re not just counting 401 errors; you’re tying them to traces, identities and configuration drift. Modern security and observability solutions for microservices let you correlate an odd JWT claim with a spike in outbound traffic and a suspicious config change in Git. Instead of combing through gigabytes of logs after an alert, you pivot across signals: pod labels, namespace, zero‑trust policies, even eBPF‑captured syscalls, all inside one narrative of the incident.
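As a rough illustration of that kind of correlation, here is a minimal sketch that groups signals by workload and flags any workload where several different kinds of signal land inside one time window. The `Signal` type, the `kind` values and the five-minute window are assumptions made for the example, not part of any particular product.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    """One security-relevant observation (hypothetical shape)."""
    ts: float       # epoch seconds
    workload: str   # e.g. "namespace/deployment"
    kind: str       # e.g. "auth_anomaly", "egress_spike", "config_change"
    detail: str


def correlate(signals, window_seconds=300.0, min_kinds=2):
    """Return {workload: [signals]} for the first time window per workload
    that contains at least `min_kinds` distinct kinds of signal."""
    by_workload = defaultdict(list)
    for signal in signals:
        by_workload[signal.workload].append(signal)

    incidents = {}
    for workload, items in by_workload.items():
        items.sort(key=lambda s: s.ts)
        start = 0
        for end in range(len(items)):
            # Slide the left edge until the window fits the time limit.
            while items[end].ts - items[start].ts > window_seconds:
                start += 1
            window = items[start:end + 1]
            if len({s.kind for s in window}) >= min_kinds:
                incidents[workload] = window
                break
    return incidents
```

In a real pipeline the correlation key would more likely be a trace ID or workload identity carried in every signal; the sliding window is only one simple way to express "these things happened close together".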

Centralized vs distributed logging: comparing core approaches

When people ask how to implement centralized logging and observability in the cloud, they usually weigh two options. The first is classic centralized logging: ship everything to a managed backend such as OpenSearch, Loki‑compatible services or cloud‑native logging platforms, then query from there. The second is a more distributed model: keep data closer to the workload, aggregate summaries and only forward what’s important. Centralization simplifies correlation and threat hunting, but risks runaway costs and noisy data. Distributed designs reduce volume and latency, yet demand stricter schema discipline and better up‑front decisions about what security context you keep or discard.
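To make the distributed option more concrete, here is a minimal sketch of an edge aggregator that forwards security-relevant events in full and reduces everything else to periodic counts. The class name, the `category` and `severity` fields and the `ALWAYS_FORWARD` set are assumptions chosen for illustration.

```python
from collections import Counter

# Hypothetical categories that must always reach the central backend in full.
ALWAYS_FORWARD = {"auth", "admin", "data_access"}


class EdgeAggregator:
    """Keeps routine events local as counts; forwards important ones as-is."""

    def __init__(self) -> None:
        self.counts = Counter()

    def process(self, event: dict):
        """Return the event if it should be forwarded in full, else None."""
        if (event.get("category") in ALWAYS_FORWARD
                or event.get("severity") in ("error", "critical")):
            return event
        key = (
            str(event.get("service", "unknown")),
            str(event.get("category", "other")),
            str(event.get("status", "unknown")),
        )
        self.counts[key] += 1
        return None

    def flush(self) -> list:
        """Emit one summary record per key and reset the local counters."""
        summaries = [
            {"service": service, "category": category,
             "status": status, "count": count}
            for (service, category, status), count in sorted(self.counts.items())
        ]
        self.counts.clear()
        return summaries
```

The decision of what goes into `ALWAYS_FORWARD` is exactly the up-front choice the distributed model forces: whatever is only counted at the edge cannot be reconstructed later during an investigation.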

Pros and cons of the main logging stacks

The “EFK”‑style stack (Fluentd or Fluent Bit plus Elasticsearch or OpenSearch and Kibana) still shows up everywhere because it’s flexible and has rich query power. Its downside is operational overhead and cost at scale, especially when every pod logs verbosely. Loki‑based systems are cheaper for huge volumes but require adapting to label‑centric thinking and may feel limiting for complex threat‑hunting queries. Proprietary cloud services integrate smoothly with identity and access management, yet can lock you in and make multi‑cloud visibility painful. The best choice balances query depth, price predictability, and how much operational burden your team can handle day to day.

Choosing monitoring and observability platforms for Kubernetes

As clusters grow, ad‑hoc dashboards break quickly, so teams look for Kubernetes monitoring and observability platforms that unify nodes, pods, ingress, service mesh and managed add‑ons. OpenTelemetry has become the baseline for instrumenting logs, metrics and traces in one consistent way, while tools like Prometheus, Tempo and modern security‑aware UIs overlay policy violations and runtime anomalies. The big win is seeing Kubernetes events, audit logs and network flows alongside application traces. The trade‑off is complexity: wiring all of this correctly, with tenant isolation and RBAC, takes more upfront design than people expect, especially in regulated environments.

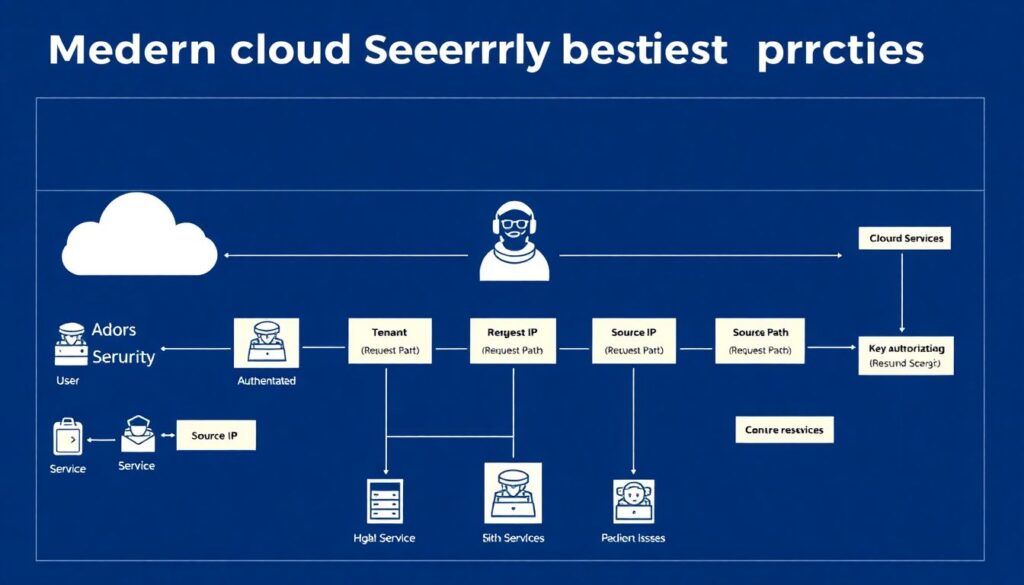

Security‑first logging practices that actually work

Best practices for cloud security logging start before you enable any agent. Define a minimum security schema that every service must log: authenticated user or workload identity, tenant, source IP, request path, key decisions from authorization, and the resource touched. Then standardize log formats, ideally JSON with consistent fields, so your SIEM and detection rules don’t depend on fragile parsing. Finally, align retention policies with the real threat model: short for debug logs, long for authentication, admin actions and data access. Security observability is less about log volume and more about reliable, queryable context.
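A minimum schema like that can be enforced at the formatter level. The following is a minimal sketch using Python's standard `logging` module; the field names in `REQUIRED_FIELDS` and the `schema_missing` marker are assumptions for illustration, so adapt them to whatever your SIEM expects.

```python
import json
import logging

# Hypothetical minimum security schema; field names are illustrative only.
REQUIRED_FIELDS = (
    "identity", "tenant", "source_ip", "path", "authz_decision", "resource",
)


class SecurityJsonFormatter(logging.Formatter):
    """Render each record as one JSON object carrying the required fields."""

    def format(self, record: logging.LogRecord) -> str:
        event = {
            "ts": self.formatTime(record, "%Y-%m-%dT%H:%M:%S%z"),
            "level": record.levelname,
            "message": record.getMessage(),
        }
        missing = []
        for field in REQUIRED_FIELDS:
            value = getattr(record, field, None)
            if value is None:
                missing.append(field)
            event[field] = value
        if missing:
            # Flag incomplete events instead of dropping them silently.
            event["schema_missing"] = missing
        return json.dumps(event, sort_keys=True)


def build_security_logger(name: str = "security") -> logging.Logger:
    """Create a logger that writes schema-checked JSON lines to stderr."""
    logger = logging.getLogger(name)
    if not logger.handlers:
        handler = logging.StreamHandler()
        handler.setFormatter(SecurityJsonFormatter())
        logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger
```

A service would then call something like `build_security_logger().info("export requested", extra={"identity": "svc-billing", "tenant": "acme", ...})`. Because the same six keys are always present, detection rules can filter on them without regex parsing, and a `schema_missing` field gives you a cheap way to find services that are not yet compliant.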

Network, identity and runtime: three angles of visibility

Good logging in cloud‑native security means treating the network, identity and runtime as three complementary lenses. Network observability should show you which pod talked to which service and why the policy engine allowed it. Identity logs must connect every request to a workload, user, or machine identity, not just an IP or token string. Runtime observability—often powered by eBPF in 2026—adds low‑level visibility into process behavior, file access and kernel calls. When these angles converge, you can spot lateral movement, credential misuse or supply‑chain tampering instead of only catching blunt denial‑of‑service attacks.

Designing observability for microservices without drowning in data

With microservices, every team wants to log “just in case,” which explodes storage and noise. Modern security and observability solutions for microservices lean on sampling, dynamic log levels and domain‑driven schemas. A practical pattern is: high‑fidelity logs for auth, payments, admin actions and data exports; sampled logs elsewhere. Traces link it all, so you can reconstruct a user journey without logging every variable. The trick is teaching teams to treat log fields as part of the API: backward‑compatible, versioned and privacy‑aware, so you don’t leak secrets or personal data into long‑lived archives by accident.
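The "high fidelity for sensitive domains, sampled elsewhere" pattern can be sketched as a standard `logging.Filter`. The `domain` and `trace_id` record attributes and the domain names below are assumptions for the example; sampling on a hash of the trace ID is one possible design that keeps or drops all lines of a given trace together.

```python
import logging
import random
import zlib

# Hypothetical domains that are never sampled.
HIGH_FIDELITY_DOMAINS = {"auth", "payments", "admin", "data_export"}


class DomainSamplingFilter(logging.Filter):
    """Keep every record from sensitive domains; sample the rest."""

    def __init__(self, sample_rate: float = 0.1) -> None:
        super().__init__()
        self.sample_rate = sample_rate

    def filter(self, record: logging.LogRecord) -> bool:
        domain = getattr(record, "domain", None)
        # Sensitive domains and anything at WARNING or above always pass.
        if domain in HIGH_FIDELITY_DOMAINS or record.levelno >= logging.WARNING:
            return True
        trace_id = getattr(record, "trace_id", None)
        if trace_id is None:
            # No trace context: fall back to plain random sampling.
            return random.random() < self.sample_rate
        # Deterministic per-trace decision based on a stable hash.
        bucket = zlib.crc32(str(trace_id).encode("utf-8")) % 10_000
        return bucket < self.sample_rate * 10_000
```

Attach it with `handler.addFilter(DomainSamplingFilter(0.05))`. Changing `sample_rate` at runtime is also a simple way to get the dynamic behavior mentioned above without redeploying services.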

Teams and responsibilities: who owns what

By 2026, the most successful companies treat observability as a product, not a side job. Platform teams offer paved roads: default collectors, dashboards and security rules; application squads adopt them and add domain‑specific signals. Security engineers contribute detection logic and incident‑response automations, while compliance folks define retention and access controls. When everyone logs creatively without shared governance, your SIEM becomes a junk drawer. Clear ownership boundaries—who defines schemas, who approves new data sources, who can query sensitive logs—are as important as any tool choice in keeping your environment both transparent and compliant.

How to pick tools and architecture in 2026

Choosing observability tools for cloud‑native environments today starts with two questions: how many clusters and regions you expect, and how regulated your data is. For small teams, managed observability services plus lightweight OpenTelemetry collectors usually beat self‑hosting. As you scale, hybrid designs emerge: regional collectors, local short‑term storage, and a central security data lake for long‑term analytics. Prioritize systems that support open standards and export to multiple backends; that way, if cost or compliance pushes you elsewhere, you’re not rewriting every dashboard and alert. Avoid “agent sprawl” by standardizing early.

New trends and what’s emerging by 2026

In 2026, observability is colliding with detection and response. Vendors now ship behavior analytics on top of your traces, spotting anomalous call graphs or impossible travel patterns for service accounts. eBPF‑powered sensors make it easier to monitor managed Kubernetes without invasive agents, although serverless platforms that do not expose the host kernel still rely on provider‑side telemetry. There’s also a push toward privacy‑preserving logging: on‑the‑fly redaction, tokenization and field‑level encryption baked into collectors. Another trend is graph‑based views, where you explore incidents as relationships among services, identities and policies instead of raw line logs, making it far easier for responders to navigate complex, multi‑cloud environments quickly.
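To show what on-the-fly redaction and tokenization can look like before an event leaves the collector, here is a minimal sketch. The field names, the regular expressions and the `tok_` prefix are assumptions for illustration, and field-level encryption is deliberately left out because it needs a real key-management story.

```python
import hashlib
import hmac
import re

# Simplified patterns for illustration; real collectors need broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
BEARER_RE = re.compile(r"(?i)\bbearer\s+[A-Za-z0-9._~+/=-]+")


def tokenize(value: str, key: bytes) -> str:
    """Replace a value with a keyed, stable pseudonym (HMAC-SHA256, truncated)."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return f"tok_{digest[:16]}"


def scrub_event(event: dict, key: bytes,
                tokenize_fields=("user_email",),
                drop_fields=("password", "authorization")) -> dict:
    """Return a copy of the event that is safer to store long term."""
    clean = {}
    for name, value in event.items():
        if name in drop_fields:
            clean[name] = "[REDACTED]"
        elif name in tokenize_fields and isinstance(value, str):
            clean[name] = tokenize(value, key)
        elif isinstance(value, str):
            # Free-text fields: strip bearer tokens, pseudonymize emails.
            value = BEARER_RE.sub("Bearer [REDACTED]", value)
            clean[name] = EMAIL_RE.sub(lambda m: tokenize(m.group(0), key), value)
        else:
            clean[name] = value
    return clean
```

Because the token is a keyed hash, the same email always maps to the same pseudonym, so responders can still pivot on a user across events without the archive holding the raw address.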

Putting it all together: a practical path forward

To move from ad‑hoc monitoring to real observability, start small but intentional. Pick one critical flow—say, user login to core transaction—and trace it end to end across logs, metrics and traces. Make sure you can answer “who did what, from where, and was it allowed?” in a few queries. Then scale that pattern to other flows, refine retention and build a central security workbook for responders. Over time, your question shifts from “Why is this broken?” to “Why did the system allow this?”—which is exactly where mature cloud‑native security observability is supposed to take you.
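As a quick self-check for that question, here is a minimal sketch that scans JSON log lines and answers "who did what, from where, and was it allowed?" for one resource. The field names (`identity`, `action`, `source_ip`, `authz_decision`, `resource`, `trace_id`) are assumed for the example; in practice you would run the equivalent query in your log backend.

```python
import json
from typing import Iterable, Iterator


def who_did_what(lines: Iterable[str], *, resource: str) -> Iterator[dict]:
    """Yield one answer per JSON log line that touched `resource`."""
    for line in lines:
        try:
            event = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip lines that are not structured JSON
        if not isinstance(event, dict) or event.get("resource") != resource:
            continue
        yield {
            "who": event.get("identity"),
            "what": event.get("action"),
            "from_where": event.get("source_ip"),
            "allowed": event.get("authz_decision") == "allow",
            "trace_id": event.get("trace_id"),
        }
```

If a function this small cannot answer the question from your logs for the chosen flow, that usually points to a missing field in the schema and not to a missing tool.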