Why misconfigurations keep blowing up in cloud security

Over the last three years, cloud breaches caused by bad configuration have stopped being an exception and become the norm. Verizon’s Data Breach Investigations Reports from 2022 to 2024 consistently show configuration mistakes and other “error” actions as one of the top causes of cloud data exposure, bouncing roughly in the 10–20% range of analyzed breaches depending on sector and technology stack. At the same time, multiple cloud threat reports from players like Wiz, Orca and Palo Alto Networks keep repeating the same uncomfortable pattern: most organizations have publicly exposed storage buckets, overly permissive identities or forgotten internet‑facing services. So if your team still treats “we’ll fix security later” as a strategy, statistically you are just joining that majority of companies walking around with misconfigured doors wide open in the cloud.

Defining the essentials: what “cloud security configuration” actually means

Before diving into mistakes, it helps to align on terminology. When we talk about “cloud security configuration”, we mean all the knobs and switches that control who can access what, from where, and under which conditions in your cloud environment. That includes identity and access management (IAM) policies, network rules like security groups and firewalls, encryption options for data at rest and in transit, logging policies, as well as service‑specific settings for databases, storage, containers and serverless functions. A cloud misconfiguration is simply a configuration that technically works but violates security expectations or cloud security best practices, such as leaving an S3 bucket public or a production database reachable from the internet with default credentials. The dangerous part is that most misconfigurations do not look like failures to application teams, because things keep “working” until an attacker shows up.

[Diagram: Imagine three nested rings. The inner ring is “Cloud Resources” (VMs, buckets, DBs). The middle ring is “Security Configuration” (IAM, network, encryption, logging). The outer ring is “Business Context” (compliance, data sensitivity, user locations). Misconfigurations occur when the middle ring is set without properly aligning with the outer ring, leaving gaps around specific resources in the inner ring.]

Statistical snapshot 2022–2025: how bad is it?

From 2022 to 2025, trend data has been painfully consistent. Cloud security vendors scanning millions of resources report that over 70% of organizations have at least one critical misconfiguration exposed to the internet at any given time. Several 2023 and 2024 cloud threat reports put misconfigurations behind a majority of cloud‑native incidents they investigated, often above 60% when you filter down to storage leakage, exposed management interfaces and overly permissive IAM roles. IBM’s Cost of a Data Breach studies in 2022, 2023 and 2024 also highlight that breaches involving cloud environments tend to take longer to detect than pure on‑prem cases, often stretching beyond 250 days, which means a simple mistake in an access policy might quietly sit there for most of a year. Looking forward, research published in early 2025 suggests that as more critical workloads move to multi‑cloud and container platforms, configuration complexity will keep rising faster than most teams can manually keep up.

Mistake #1: Storage data made public by accident

Cloud storage services make it insanely easy to share files with the world, sometimes a bit too easy. A storage misconfiguration happens when a bucket, blob container or object store that should be private ends up readable (or even writable) from the public internet. That can happen via “public read” flags, inherited policies, temporary anonymous links that never get revoked, or misused CDN and static website options. Modern breach catalogs are filled with incidents where companies leaked millions of customer records just because a logging bucket was left open. Over the last three years, exposed storage has remained one of the most common cloud issues flagged by automated scanners, frequently affecting over half of the tenants analyzed by some providers. Attackers need no sophisticated exploit here: they simply crawl for these open endpoints using search engines and specialized tools, no zero‑day required.

[Diagram: Think of a bucket icon inside a VPC cloud shape. Normally, arrows come only from the “App Servers” box. In a misconfiguration, a big arrow labeled “Public Internet” also points directly to the bucket, bypassing the app. The risk zone is that direct public arrow.]

How to avoid public storage disasters

The fix requires both guardrails and habits. First, treat private as the default: turn on account‑level blocks for public access where supported (AWS Block Public Access, disallowing public blob access on Azure storage accounts, GCP public access prevention together with uniform bucket‑level access). Then configure explicit exceptions only for buckets that are meant to be public, like static websites, and document those decisions. Second, integrate continuous scanning through cloud security protection tools that alert you whenever new public buckets or containers appear. Over the past three years, companies with automated checks wired into CI/CD pipelines show drastically fewer long‑lived storage exposures compared with those relying on manual reviews only. For high‑sensitivity data, mandate encryption with KMS keys and private access paths (such as VPC endpoints), so even if a setting briefly flips, the blast radius stays limited.
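As a rough illustration of the kind of automated check described above, the following Python sketch flags statements in an S3‑style bucket policy that grant access to everyone. It is a deliberately simplified heuristic, not how any provider evaluates access: it ignores ACLs, account‑level blocks and the content of `Condition` clauses. The function names, the bucket name `example-logs` and the account ID are made up for the example.

```python
def _as_list(value):
    """Policy fields may hold one item or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def _is_anonymous(principal):
    """True when the principal is the wildcard, i.e. anyone on the internet."""
    if principal == "*":
        return True
    if isinstance(principal, dict):
        return "*" in _as_list(principal.get("AWS", []))
    return False


def public_statements(policy):
    """Return Allow statements open to everyone with no Condition attached."""
    return [
        stmt
        for stmt in _as_list(policy.get("Statement", []))
        if stmt.get("Effect") == "Allow"
        and _is_anonymous(stmt.get("Principal"))
        and not stmt.get("Condition")
    ]


# Hypothetical policy for a bucket named "example-logs".
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "PublicRead", "Effect": "Allow", "Principal": "*",
         "Action": "s3:GetObject", "Resource": "arn:aws:s3:::example-logs/*"},
        {"Sid": "AppOnly", "Effect": "Allow",
         "Principal": {"AWS": "arn:aws:iam::111122223333:role/app"},
         "Action": "s3:PutObject", "Resource": "arn:aws:s3:::example-logs/*"},
    ],
}

for stmt in public_statements(policy):
    print(f"PUBLIC: statement {stmt['Sid']} allows {stmt['Action']} to anyone")
```

A check like this is cheap enough to run on every pull request that touches a bucket policy, with documented public buckets kept on an explicit allow list.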

Mistake #2: IAM “allow *” and identities with superpowers

Identity and Access Management is supposed to implement least privilege but, under pressure to “just make it work”, teams often fall back to wildcards and admin roles. A privilege misconfiguration appears when a user, service account or role gets much broader permissions than necessary, often via policies like `"Action": "*"` or attaching built‑in administrator roles to automation scripts. Cloud incident data across 2022–2025 keeps showing that once attackers gain any foothold (phishing, leaked key, compromised build server), they move laterally by abusing these powerful identities instead of looking for obscure vulnerabilities. That’s why misconfigured IAM is repeatedly cited as a key factor in multi‑stage breaches: it turns a minor incident into a full‑blown compromise. Compared to on‑prem Active Directory, cloud IAM is more granular but also more complex, and the sheer number of services and actions makes accidental over‑granting extremely common.

[Diagram: There is a “Developer Role” box connected to icons for S3, RDS, Lambda, IAM, KMS, and Billing. In a secure case, only a few arrows exist. In the misconfigured case, every icon is lit and all arrows are present, illustrating over‑permission.]

Making IAM survivable in real life

Start with roles and groups based on job functions, not individual snowflake policies. Use managed policies from providers as reference but trim them down. For machine identities, always prefer short‑lived tokens or instance roles over static access keys; several 2023–2024 incident reports note that leaked long‑lived keys remain a frequent initial entry vector in cloud breaches. Combine IAM Access Analyzer‑style tools with managed cloud security services to continuously detect excessive permissions and unused privileges. Over time, enforce permission boundaries or custom roles with narrow scopes, then enable just‑in‑time elevation for rare admin tasks. This may feel heavier than the classic “give devs admin and trust them”, but history from the last few years shows that human mistakes and compromised laptops are inevitable, so identities must be designed to fail small.
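To make “detect excessive permissions” a little more concrete, here is a minimal Python sketch that scans an AWS‑style IAM policy document for wildcard grants. It only looks at `Effect`, `Action` and `Resource`; it does not handle `NotAction`, conditions or permission boundaries, so treat it as a first‑pass lint rather than a real access analysis. The function name and the sample policy are illustrative assumptions.

```python
def _as_list(value):
    """Policy fields may hold one item or a list; normalize to a list."""
    return value if isinstance(value, list) else [value]


def wildcard_findings(policy):
    """Return (statement index, reason) pairs for overly broad Allow statements."""
    findings = []
    for index, stmt in enumerate(_as_list(policy.get("Statement", []))):
        if stmt.get("Effect") != "Allow":
            continue  # Deny statements cannot over-grant
        actions = _as_list(stmt.get("Action", []))
        resources = _as_list(stmt.get("Resource", []))
        service_wide = [a for a in actions if a.endswith(":*")]
        if "*" in actions:
            findings.append((index, 'Action is "*"'))
        elif service_wide and "*" in resources:
            findings.append((index, f"{service_wide} allowed on all resources"))
    return findings


# Hypothetical policy attached to a CI automation role.
policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": "*", "Resource": "*"},
        {"Effect": "Allow", "Action": ["s3:*"], "Resource": "*"},
        {"Effect": "Allow", "Action": ["s3:GetObject"],
         "Resource": "arn:aws:s3:::example-artifacts/*"},
        {"Effect": "Deny", "Action": "*", "Resource": "*"},
    ],
}

for index, reason in wildcard_findings(policy):
    print(f"statement {index}: {reason}")
```

Run against every policy in a repository, a lint like this turns the wildcard habit into a review comment instead of a post‑incident finding.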

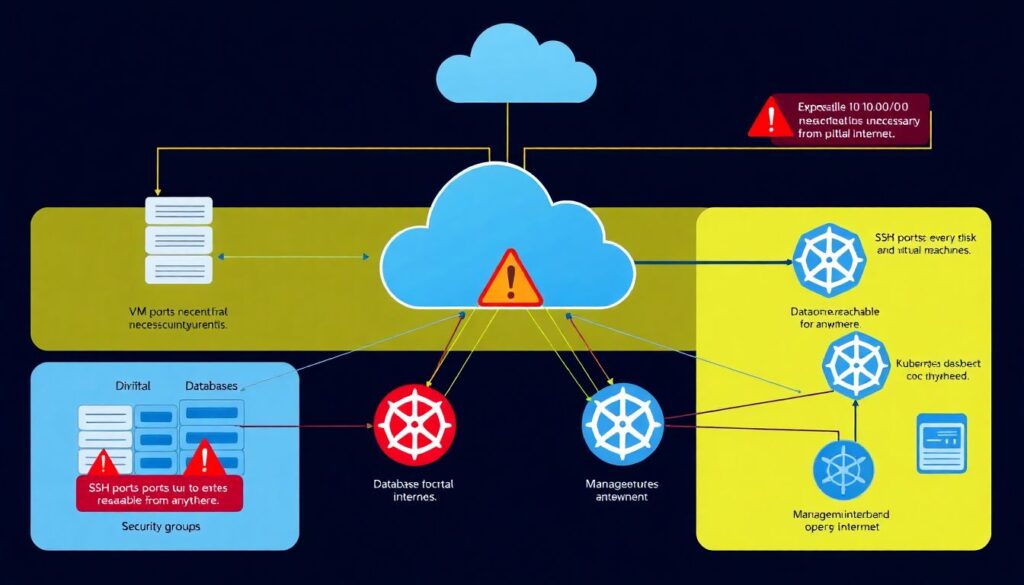

Mistake #3: Open network ports and giant attack surfaces

Network misconfigurations happen when security groups, firewalls or load balancers expose unnecessary ports or entire subnets to the internet. Examples include SSH open to 0.0.0.0/0 on every VM, database ports reachable from anywhere, or management interfaces like Kubernetes dashboards exposed without proper authentication. Industry telemetry across 2022–2025 repeatedly shows millions of cloud IPs with RDP, SSH or database endpoints listening directly on the internet, many with weak or default credentials. Attackers don’t even have to scan from scratch; services like Shodan and Censys index these exposures in near‑real time. Compared to traditional data centers, where network changes often go through change control, cloud teams can open ports with a few clicks or lines of Terraform, meaning misconfigurations propagate faster and at larger scale, especially in multi‑account and multi‑subscription enterprises.

[Diagram: Picture three tiers: “Internet”, “Public Subnet”, and “Private Subnet”. In the safe design, only a load balancer in the public subnet talks to app servers in the private one. In the misconfigured version, several databases and admin ports in the private subnet have direct arrows from the Internet layer.]

Reducing network exposure without killing agility

A practical approach is to invert the default: instead of deciding what to block, decide what absolutely must be public and keep everything else private. Use private subnets, VPN or Zero Trust access brokers for admins, and restrict management ports to specific jump hosts or identity‑aware proxies. Operationally, treat security groups and firewall rules as code, reviewed in pull requests, so bad patterns like `0.0.0.0/0` on sensitive ports trigger discussions before deployment. Over the past three years, organizations that deployed network‑level micro‑segmentation combined with continuous attack surface monitoring showed clear reductions in exposed services in independent security assessments. If your team struggles with this complexity, bringing in enterprise cloud security consulting for a focused redesign of VPCs, VNets and peerings often pays off quickly, especially for regulated industries under constant audit pressure.
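One way to catch `0.0.0.0/0` on sensitive ports before deployment is a small check in the review pipeline. The Python sketch below assumes firewall rules have already been exported into a simple list of dictionaries; the field names (`name`, `cidr`, `from_port`, `to_port`) and the port list are assumptions for this example, not any provider's schema.

```python
import ipaddress

# Ports that should rarely, if ever, face the whole internet (illustrative list).
SENSITIVE_PORTS = {
    22: "SSH",
    3389: "RDP",
    3306: "MySQL",
    5432: "PostgreSQL",
}


def world_open_findings(rules):
    """Return (rule name, port, service) for sensitive ports open to any address."""
    findings = []
    for rule in rules:
        network = ipaddress.ip_network(rule["cidr"], strict=False)
        if network.prefixlen != 0:
            continue  # only 0.0.0.0/0 and ::/0 cover the entire internet
        for port, service in SENSITIVE_PORTS.items():
            if rule["from_port"] <= port <= rule["to_port"]:
                findings.append((rule["name"], port, service))
    return findings


# Hypothetical ingress rules.
rules = [
    {"name": "web", "cidr": "0.0.0.0/0", "from_port": 443, "to_port": 443},
    {"name": "ssh-any", "cidr": "0.0.0.0/0", "from_port": 22, "to_port": 22},
    {"name": "db-internal", "cidr": "10.0.0.0/8", "from_port": 5432, "to_port": 5432},
]

for name, port, service in world_open_findings(rules):
    print(f"{name}: {service} (port {port}) is open to the entire internet")
```

The check treats port ranges as inclusive, so a rule opening 0 to 65535 to the world is flagged once per sensitive port it covers.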

Mistake #4: Logs, monitoring and alerts turned off (or ignored)

Another classic misconfiguration is simply not logging enough, or logging but throwing the data away. A visibility misconfiguration arises when critical services like databases, API gateways or identity providers run without audit logs, or when logs never reach a centralized SIEM or cloud logging service. Breach reports between 2022 and 2025 repeatedly mention that many victims had no useful logs for the early stages of attacks, forcing investigators to rely on external sources or guesswork. That hampers not only forensic analysis but also compliance with regulations that expect traceability of access to personal data. Compared to on‑prem, cloud platforms provide extremely rich telemetry, but it must be explicitly enabled, configured and retained with sane policies; misconfiguring retention to only a few days or not indexing relevant fields is nearly as bad as not logging at all.

[Diagram: A row of service icons (Web App, DB, IAM, API GW) each sending arrows to a central “Log Lake / SIEM” box. In the misconfigured scenario, some arrows are missing or end in small local disks, indicating logs are not centralized or retained.]

Turning logging from checkbox to defense mechanism

Start by enabling platform‑native audit features for identities, networks and data stores, then forward them into a central pipeline with long‑enough retention aligned to your regulatory obligations. Over the last few years, teams that wired cloud logs into detection rules for common misconfigurations (for example, alerts on newly public buckets or sudden creation of powerful IAM roles) have been able to catch mistakes within hours instead of months. When you engage managed cloud security services or MDR providers, ensure they truly understand cloud log formats and not just traditional syslog; otherwise, you are paying for eyes that can’t read half your environment. Finally, practice incident response using these logs at least annually so gaps in coverage, retention or parsing are discovered in an exercise, not during a real breach under legal and media pressure.
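A detection rule of the kind mentioned above can be very small. The Python sketch below filters a stream of audit events for changes that often precede a storage or IAM misconfiguration. It assumes events shaped loosely like AWS CloudTrail records (`eventSource`, `eventName`, `eventTime`, `userIdentity.arn`); the watch list, the function name and the sample events are illustrative, and a real rule would also inspect the request parameters to confirm the change actually widened access.

```python
# (event source, event name) pairs worth a second look, with a short reason.
WATCHED = {
    ("s3.amazonaws.com", "PutBucketPolicy"): "bucket policy changed",
    ("s3.amazonaws.com", "PutBucketAcl"): "bucket ACL changed",
    ("iam.amazonaws.com", "CreateRole"): "new IAM role created",
    ("iam.amazonaws.com", "AttachRolePolicy"): "policy attached to role",
}


def alerts_for(events):
    """Return one human-readable alert line per watched event."""
    alerts = []
    for event in events:
        reason = WATCHED.get((event.get("eventSource"), event.get("eventName")))
        if reason is None:
            continue
        actor = event.get("userIdentity", {}).get("arn", "unknown actor")
        alerts.append(f"{event.get('eventTime', '?')} {reason} by {actor}")
    return alerts


# Hypothetical, heavily trimmed audit events.
events = [
    {"eventTime": "2024-05-01T10:00:00Z", "eventSource": "s3.amazonaws.com",
     "eventName": "GetObject",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:role/app"}},
    {"eventTime": "2024-05-01T10:05:00Z", "eventSource": "s3.amazonaws.com",
     "eventName": "PutBucketAcl",
     "userIdentity": {"arn": "arn:aws:iam::111122223333:user/dev"}},
]

for line in alerts_for(events):
    print(line)
```

The point is less the matching logic than the plumbing: a rule like this only works if the audit events are enabled, centralized and retained in the first place.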

Mistake #5: Inadequate encryption and sloppy key management

Encryption misconfigurations appear when sensitive data is stored or transmitted without strong encryption, or when keys are managed carelessly. Common patterns include leaving storage unencrypted, accepting outdated TLS versions, or sharing KMS keys across unrelated applications. From 2022 to 2025, most major cloud providers have pushed default encryption at rest, but misconfigurations still crop up via legacy services, self‑managed databases or lift‑and‑shift workloads. Several recent public incidents show that even when disks were encrypted, attackers who compromised IAM roles with KMS access could decrypt everything, turning “encrypted” into a false sense of security. Compared with classic on‑prem HSM setups, cloud key management offers flexible APIs but also increases the risk that developers programmatically over‑expose keys to microservices that don’t actually need them, widening the blast radius of any compromised component.

[Diagram: A “Data Store” icon with a lock symbol, and next to it a “KMS Keys” box. In a good design, only a small set of app components connect to the keys. In a bad one, many services and users all have arrows to the KMS box, illustrating over‑exposed keys.]

Keeping encryption helpful instead of cosmetic

Treat encryption options as non‑negotiable for any service that handles customer data, intellectual property or credentials. Enforce policies that block deployments of unencrypted volumes or storage, and use service control policies or guardrails to prevent disabling encryption without a documented exception. Centralize key management with clear separation of duties: security or platform teams manage KMS policies, while application teams use keys via least‑privilege grants. Over the last three years, organizations that introduced regular key rotation, strong access reviews and hardware‑backed root keys showed significantly better results in independent cloud readiness assessments. If uncertainty remains about proper design across providers, an external cloud security audit covering AWS, Azure or GCP can map where keys live, who can use them and whether any cross‑environment shortcuts were taken that might undermine compliance frameworks like GDPR or PCI DSS.
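Mapping “who can use which key” can start from a plain inventory. The Python sketch below assumes you have already exported key grants into a list of records with `key_id`, `principal` and `app` fields (a hypothetical format, not a provider API) and reports keys shared by more than one application, which is the over‑exposure pattern described earlier.

```python
from collections import defaultdict


def shared_keys(grants):
    """Return {key_id: sorted app names} for keys used by more than one app."""
    apps_by_key = defaultdict(set)
    for grant in grants:
        apps_by_key[grant["key_id"]].add(grant["app"])
    return {
        key_id: sorted(apps)
        for key_id, apps in apps_by_key.items()
        if len(apps) > 1
    }


# Hypothetical inventory of key grants.
grants = [
    {"key_id": "key-payments", "principal": "role/payments-api", "app": "payments"},
    {"key_id": "key-payments", "principal": "role/payments-worker", "app": "payments"},
    {"key_id": "key-shared", "principal": "role/payments-api", "app": "payments"},
    {"key_id": "key-shared", "principal": "role/marketing-etl", "app": "marketing"},
]

for key_id, apps in shared_keys(grants).items():
    print(f"{key_id} is usable by unrelated applications: {', '.join(apps)}")
```

Two roles from the same application sharing a key is not flagged; the report only fires when a compromise in one application would expose another application's data.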

Mistake #6: Inconsistent configurations in multi‑cloud environments

As more companies adopt multiple providers, a unique class of misconfiguration appears: inconsistency. The same application might be locked down tightly in one cloud and nearly open in another because different teams, tools or naming conventions were used. From 2022 to 2025, survey data shows that organizations running multi‑cloud tend to have a higher volume of misconfigurations per workload, not necessarily because any provider is less secure, but because policy drift and duplicated effort create blind spots. IAM concepts differ, network constructs have subtle variations, and security baselines written originally for a single platform often don’t translate cleanly. In that sense, multi‑cloud is like managing fleets of very similar but not identical cars: if you copy the maintenance checklist blindly, you either miss critical steps or overcomplicate the process, and both outcomes translate into higher risk.

[Diagram: Two clouds side by side, labeled “Cloud A” and “Cloud B”, each containing icons for IAM, Network, Storage and Logging. In Cloud A, everything is green and consistent; in Cloud B, several icons are red with small warning symbols, illustrating drift between environments.]

Bringing order to multi‑cloud chaos

The core strategy is to define security requirements in provider‑neutral language (for example, “all external endpoints require TLS 1.2+ and WAF protection”) and then implement them via code for each platform, keeping that code in shared repositories. Use policy‑as‑code engines and CSPM tools that understand multiple providers, so misconfigurations are detected uniformly rather than only in your “primary” cloud. Over the past three years, mature teams have increasingly built internal “platform layers” that hide raw provider differences behind common modules, making it harder for product teams to accidentally bypass controls. Where gaps persist, targeted enterprise cloud security consulting focused specifically on multi‑cloud governance can accelerate alignment, especially when internal teams are stuck fighting day‑to‑day fires instead of designing coherent, reusable patterns for the future.
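The provider‑neutral rule quoted above (“all external endpoints require TLS 1.2+ and WAF protection”) can be expressed once and applied to resources from any cloud, provided each provider's data is first normalized into a common shape. The Python sketch below assumes such a normalized `Endpoint` record; the class, its fields and the sample data are hypothetical, and the provider‑specific collectors that would fill it are out of scope here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Endpoint:
    """Provider-neutral view of a network endpoint (hypothetical schema)."""
    provider: str
    name: str
    public: bool
    min_tls: tuple  # minimum accepted TLS version, e.g. (1, 2)
    waf_enabled: bool


def violations(endpoints, required_tls=(1, 2)):
    """Return (provider, name, problem) for public endpoints breaking the rule."""
    problems = []
    wanted = ".".join(str(part) for part in required_tls)
    for ep in endpoints:
        if not ep.public:
            continue  # the rule only covers external endpoints
        if ep.min_tls < required_tls:
            problems.append((ep.provider, ep.name, f"accepts TLS below {wanted}"))
        if not ep.waf_enabled:
            problems.append((ep.provider, ep.name, "no WAF in front"))
    return problems


# Hypothetical endpoints collected from two providers.
endpoints = [
    Endpoint("cloud-a", "shop-frontend", True, (1, 2), True),
    Endpoint("cloud-b", "shop-frontend", True, (1, 0), False),
    Endpoint("cloud-b", "internal-admin", False, (1, 0), False),
]

for provider, name, problem in violations(endpoints):
    print(f"[{provider}] {name}: {problem}")
```

Keeping the rule in one function and the provider quirks in the collectors means the same application is judged by the same standard in every cloud, which is exactly what drift undermines.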

Prevention in practice: from good intentions to real controls

Avoiding these errors is less about hiring mythical perfect engineers and more about designing systems where mistakes are quickly visible and cheaply reversible. Security baselines expressed as code, mandatory peer reviews for infrastructure changes and automated checks in CI/CD pipelines have proven effective across many organizations from 2022 to 2025, regardless of industry. Embedding cloud‑aware security champions in product teams dramatically improves the odds that secure configuration is considered early, instead of bolted on at the end. Continuous training aligned with real incidents, showing how a single “public” flag or `"Action": "*"` line led to data exposure, tends to resonate far more than abstract policy documents. When internal capacity is stretched, using managed cloud security services to operate detection and response 24/7 buys breathing room, but ownership of architecture and risk decisions must stay with the business.

Comparing cloud security misconfigs with on‑prem realities

It can be tempting to think “this wouldn’t have happened in our old data center”, but the truth is many of the same errors existed there: open ports, weak accounts, missing logs. What has changed in the cloud era is speed and scale. On‑prem, provisioning a new database with a bad firewall rule could take days or weeks; in cloud, the same mistake can be cloned to dozens of regions in minutes through automation. At the same time, the cloud gives access to far richer native controls and sensors than most traditional infrastructures ever had, if you use them correctly. In practice, organizations that actively leverage native cloud features, combined with third‑party cloud security protection tools and periodic external cloud security audits of their AWS and Azure environments, often achieve a better security posture than legacy environments. The key difference is intentional design: cloud amplifies both discipline and sloppiness, so investing in one or the other determines which side of the statistics you end up on.

Closing thoughts: building a culture that outlives individual mistakes

Cloud security misconfigurations are ultimately a human problem expressed through technical settings. Over the last three years, the data has been blunt: misconfigurations are not edge cases, they are one of the primary ways organizations lose data in the cloud. The path forward is less glamorous than chasing the latest exploit, but far more impactful: solid defaults, repeatable patterns, continuous validation and a culture where reporting and fixing mistakes is encouraged rather than punished. If you combine clear definitions of what “good” looks like, rigorous automation, and occasional independent reviews from experienced cloud practitioners, the usual suspects (public buckets, over‑privileged roles, open ports, missing logs and sloppy keys) gradually move from scary unknowns to rare exceptions. That’s how cloud security stops being a lottery and becomes a manageable, measurable part of daily engineering work.