When people talk about “secure cloud-native”, they usually throw around buzzwords and move on. But when you’re actually running money‑making workloads in containers and Kubernetes, every misconfigured YAML or overly generous role can turn into a weekend‑destroying incident. In the last three years the number of cloud‑native environments in production has exploded, attacks have become more automated, and the old “trust the cluster, it’s internal” mindset just stopped working. Let’s walk through real‑world risks, non‑obvious fixes and what the market already considers the baseline for doing this safely, without drowning in theory or vendor FUD.

Why containers and Kubernetes in the cloud change the risk equation

A lot of teams still treat containers as “lightweight VMs”, but container and Kubernetes security in the cloud is a different game. You’re dealing with ephemeral workloads, shared kernels, service meshes, sidecars, managed ingress controllers and a crazy amount of automation. According to the CNCF surveys from 2021–2023, more than 80% of respondents run Kubernetes in production, and over 60% run it in public cloud. At the same time, Palo Alto’s Unit 42 reported in 2022 that about 63% of analyzed container images had high or critical vulnerabilities; Aqua’s 2023 report showed attackers finding and exploiting exposed containers in under 5 minutes on average. So even if you patch fast, you’re playing on a shorter timeline, with a much larger attack surface than the old three‑tier VM stack.

The risk profile also shifts because your blast radius is different. Instead of one compromised server, you may have an over‑privileged service account that can list secrets across namespaces, or an insecure CI pipeline that pushes poisoned images to a shared registry. Since 2021 we’ve seen several public incidents where misconfigured Kubernetes dashboards, open etcd instances or exposed kubelet ports allowed external access straight into clusters. Many of those weren’t “0‑day” stories; they were “left the door wide open and nobody noticed” stories. That’s why cloud‑native security is less about heroics and more about getting a long list of small things consistently right and automated.

Real incidents: from exposed dashboards to cryptomining in clusters

Let’s get concrete. One of the best‑known Kubernetes breaches was Tesla’s 2018 incident, where attackers found an unsecured Kubernetes dashboard and deployed cryptominers. That case is older, but very similar patterns showed up again in Aqua and Sysdig threat reports between 2021 and 2023: exposed dashboards, Docker daemons that accept unauthenticated connections, test clusters promoted to production without basic hardening. In several newer cases, attackers didn’t even bother with lateral movement; they just spun up massive cryptomining workloads, abused the cloud provider’s compute and quietly exfiltrated credentials from environment variables. In 2022, Sysdig found that almost 75% of the container‑focused attacks it observed involved cryptomining or resource hijacking, often combined with attempts to harvest cloud keys stored inside pods.

Another recurring real‑world problem is supply‑chain compromise in the build pipeline. Between 2021 and 2023, multiple reports (including from Snyk and GitHub) highlighted that a big chunk of container images in public registries contained known critical CVEs, sometimes for years. One financial‑sector case that circulated at conferences described a team that based their images on an outdated public “convenience” image that silently added a reverse shell via a malicious package. Nobody noticed for months, because the only “security check” was a one‑off manual review. Once the image was in the private registry, it looked trusted. The painful lesson: if you don’t control your base images and your registry policies, someone else might.

Key risks in cloud Kubernetes most teams still underestimate

If we condense the last three years of incident reports and red‑team talks, a few types of risks stand out. First, identity and permissions: over‑permissive RBAC and cloud IAM roles are still everywhere. Google and AWS have both shared anonymized data showing that a high percentage of workloads run with roles that can access far more resources than they actually use. In practice that means a single compromised pod can list S3 buckets, read secrets from Parameter Store, or alter other workloads. Second, network exposure: misconfigured ingress, NodePorts left open, and service meshes deployed in “allow‑all” mode are prime entry points, especially when combined with weak authentication on internal APIs.
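
To make the RBAC part of this concrete, here is a minimal sketch of a check that flags overly broad rules in a Role or ClusterRole that has already been loaded into a Python dict (for example from YAML). The `kind`, `rules`, `verbs` and `resources` fields follow the Kubernetes RBAC manifest layout; the function name and the wording of the findings are assumptions made for illustration, and a real review would cover many more cases.

```python
from typing import Any


def find_risky_rules(role: dict[str, Any]) -> list[str]:
    """Return human-readable findings for overly broad RBAC rules.

    `role` is a Role or ClusterRole manifest already parsed into a dict.
    This is an illustrative heuristic, not a complete RBAC audit.
    """
    findings = []
    for index, rule in enumerate(role.get("rules", [])):
        verbs = set(rule.get("verbs", []))
        resources = set(rule.get("resources", []))
        if "*" in verbs:
            findings.append(f"rule {index}: wildcard verb")
        if "*" in resources:
            findings.append(f"rule {index}: wildcard resource")
        # Read access to secrets is far more dangerous when granted cluster-wide.
        can_read = bool(verbs & {"get", "list", "watch"})
        if "secrets" in resources and can_read and role.get("kind") == "ClusterRole":
            findings.append(f"rule {index}: cluster-wide read access to secrets")
    return findings
```

Running something like this over every role in a cluster gives a rough first list of candidates to tighten; it says nothing about cloud IAM roles, which need their own review.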

The third under‑appreciated risk is the control plane itself. Even when you use secure managed Kubernetes services in the cloud, such as GKE, AKS or EKS, you’re still responsible for things like API server access policies, audit logging, admission controllers and secret management. From 2021 to 2023, several pentest firms published findings where they gained full cluster admin just by abusing neglected service accounts and default tokens with access to the API server over an internal endpoint. None of this looked scary in diagrams, but it turned “one compromised pod” into “complete cluster takeover” in minutes. This is where Kubernetes cloud security best practices become more than buzzwords: safe defaults, strict RBAC and tight integration with the cloud provider’s IAM go a long way.
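
The “neglected service accounts and default tokens” problem can be spotted with a very small check. The sketch below inspects a pod manifest parsed into a dict and reports two things: use of the namespace’s `default` service account, and an API token that has not been explicitly switched off. `serviceAccountName` and `automountServiceAccountToken` are real pod spec fields; the function name is hypothetical, and the sketch deliberately ignores the setting on the ServiceAccount object itself, which also influences whether a token is mounted.

```python
def token_findings(pod: dict) -> list[str]:
    """Flag pods that rely on the default service account or an implicit token.

    Only the pod spec is inspected; the ServiceAccount object can also
    control token mounting and is out of scope for this sketch.
    """
    spec = pod.get("spec", {})
    findings = []
    if spec.get("serviceAccountName", "default") == "default":
        findings.append("uses the namespace's default service account")
    # Anything other than an explicit False means a token may be mounted.
    if spec.get("automountServiceAccountToken") is not False:
        findings.append("API token is not explicitly disabled")
    return findings
```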

Practical best practices that actually work in production

The usual recommendations (least privilege, patch fast, scan images) are valid, but they only help if you wire them into daily workflows instead of PDF policies. A very pragmatic starting point is to treat your registry as a security control, not just storage. Require every image to be scanned before it can be pulled into the cluster; many cloud registries support built‑in scanners and policy hooks. Combine that with a simple rule: production namespaces only accept images from a blessed registry path with signed provenance. This already blocks a whole family of accidental and malicious image usage. It also pushes teams to adopt build pipelines that sign images (using tools like Cosign) and keep SBOMs, which is slowly becoming a market standard across regulated industries.
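
The “blessed registry path plus signature” rule boils down to a small decision function. What follows is only a sketch: `TRUSTED_PREFIX` is a made‑up registry path, and `signed_digests` stands in for the output of a real signature verification step (for instance one built around Cosign). The code itself does not verify any signatures.

```python
from dataclasses import dataclass

# Hypothetical registry path; replace with your own blessed location.
TRUSTED_PREFIX = "registry.example.com/prod/"


@dataclass(frozen=True)
class ImageDecision:
    allowed: bool
    reason: str


def check_image(image: str, signed_digests: set[str]) -> ImageDecision:
    """Decide whether an image reference may run in production.

    `signed_digests` is assumed to contain digests whose signatures were
    already verified elsewhere; this function only applies the policy.
    """
    if not image.startswith(TRUSTED_PREFIX):
        return ImageDecision(False, "image is outside the trusted registry path")
    _, separator, digest = image.partition("@")
    # Tags can be moved to different content; digests cannot.
    if not separator or not digest.startswith("sha256:"):
        return ImageDecision(False, "image must be pinned by digest")
    if digest not in signed_digests:
        return ImageDecision(False, "no verified signature recorded for this digest")
    return ImageDecision(True, "ok")
```

Requiring a digest rather than a tag is a design choice in this sketch: it ties the signature check to exact image content instead of a mutable label.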

On the cluster side, turn “security hygiene” into code. That means using admission controllers or policy engines like Gatekeeper or Kyverno to encode guardrails: no privileged pods, no hostPath volumes, required resource limits, enforced namespaces and labels. In 2022–2023, companies that shared their experiences at KubeCon often highlighted that once these policy engines were in place, misconfigurations dropped dramatically, and security teams finally stopped playing whack‑a‑mole in reviews. The non‑obvious recipe is to start with audit‑only mode and collaborate with dev teams to fix the most common violations, then slowly flip policies to enforcing. If you jump straight to “block everything”, you’ll get bypasses and resentment instead of security.
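
To illustrate the audit‑first rollout, here is a rough Python sketch of the logic a guardrail applies. It is not Gatekeeper or Kyverno syntax; it only mimics the idea that the same rules can either report or block. The pod fields (`volumes`, `hostPath`, `securityContext.privileged`, `resources.limits`) follow the Kubernetes pod spec, while the function names and the `mode` values are assumptions for this example.

```python
def pod_violations(pod: dict) -> list[str]:
    """List guardrail violations for a pod manifest parsed into a dict."""
    spec = pod.get("spec", {})
    violations = []
    for volume in spec.get("volumes", []):
        if "hostPath" in volume:
            violations.append(f"volume {volume.get('name', '?')} uses hostPath")
    for container in spec.get("containers", []):
        name = container.get("name", "?")
        security = container.get("securityContext") or {}
        if security.get("privileged") is True:
            violations.append(f"container {name} is privileged")
        limits = (container.get("resources") or {}).get("limits") or {}
        if "cpu" not in limits or "memory" not in limits:
            violations.append(f"container {name} lacks cpu/memory limits")
    return violations


def admit(pod: dict, mode: str = "audit") -> tuple[bool, list[str]]:
    """Return (allowed, violations).

    In "audit" mode violations are reported but never block; only
    "enforce" mode rejects a pod that has violations.
    """
    violations = pod_violations(pod)
    allowed = mode != "enforce" or not violations
    return allowed, violations
```

Because both modes share `pod_violations`, the findings teams see during the audit phase are exactly the ones that will block them later, which keeps the switch to enforcement predictable.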

Non‑obvious solutions that reduce risk without slowing teams down

One surprisingly effective and still underused tactic is runtime‑focused control with soft isolation patterns. Instead of trying to make every pod perfectly hardened, you create “lanes”: for example, workloads that process sensitive data get their own nodes, dedicated namespaces, stricter PodSecurity standards and extra logging, while less critical batch jobs run on cheaper, more general‑purpose pools. This isn’t full multi‑tenant isolation, but it shrinks the blast radius drastically. Several big tech companies have presented variants of this idea at conferences since 2021, showing that most high‑impact incidents stayed confined to a single “lane”, turning what could have been a company‑level breach into a noisy but manageable outage.
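
One way to picture the “lanes” idea is as a small lookup that maps a workload’s data classification to a node pool and a Pod Security level. Everything named here is hypothetical (the `data-classification` label, the `pool` and `lane` keys, the pool names); only `nodeSelector`, `tolerations` and the `pod-security.kubernetes.io/enforce` namespace label are standard Kubernetes constructs.

```python
# Hypothetical lane definitions; pool names are placeholders.
LANES = {
    "sensitive": {"node_pool": "sensitive-pool", "pod_security": "restricted"},
    "general": {"node_pool": "general-pool", "pod_security": "baseline"},
}


def lane_for(labels: dict[str, str]) -> str:
    """Pick a lane from workload labels; unlabeled workloads go to 'general'."""
    if labels.get("data-classification") == "sensitive":
        return "sensitive"
    return "general"


def namespace_labels(lane: str) -> dict[str, str]:
    """Labels for the lane's namespace, including the Pod Security level."""
    return {
        "lane": lane,
        "pod-security.kubernetes.io/enforce": LANES[lane]["pod_security"],
    }


def scheduling_patch(lane: str) -> dict:
    """Pod spec fragment that pins a workload to the lane's node pool."""
    return {
        "nodeSelector": {"pool": LANES[lane]["node_pool"]},
        "tolerations": [
            {"key": "lane", "operator": "Equal", "value": lane, "effect": "NoSchedule"}
        ],
    }
```

Sending unlabeled workloads to the general lane is a convenience in this sketch; a stricter setup might reject workloads that carry no classification at all.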

Another non‑obvious, but powerful move: bring product teams into incident simulations early. Over the last three years, organizations that ran game‑days focused on Kubernetes threats reported faster detection and response times. When developers see in practice how an over‑privileged service account can leak cloud credentials, they start to care about YAML fields they previously ignored. Tie this to metrics (time‑to‑detect, time‑to‑contain, number of pods exposed) and track them quarter over quarter. While global, consolidated statistics for 2024–2026 aren’t fully published yet, multi‑year trends up to 2023 suggest that companies cut their median container incident detection time by more than 30% once they combined tooling with regular hands‑on drills, rather than relying on passive dashboards alone.
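
Tracking those metrics quarter over quarter does not need a product; a few lines are enough to get started. The sketch below assumes each incident or game‑day record is a dict with `started`, `detected` and `contained` timestamps, which is a made‑up record shape for this example, and it reports medians in minutes.

```python
from collections import defaultdict
from datetime import datetime
from statistics import median


def quarter_of(moment: datetime) -> str:
    """Format a timestamp as e.g. '2023-Q1'."""
    return f"{moment.year}-Q{(moment.month - 1) // 3 + 1}"


def median_minutes_by_quarter(incidents: list[dict]) -> dict[str, dict[str, float]]:
    """Median time-to-detect and time-to-contain per quarter, in minutes.

    Incidents are grouped by the quarter in which they started.
    """
    detect = defaultdict(list)
    contain = defaultdict(list)
    for incident in incidents:
        key = quarter_of(incident["started"])
        detect[key].append((incident["detected"] - incident["started"]).total_seconds() / 60)
        contain[key].append((incident["contained"] - incident["detected"]).total_seconds() / 60)
    return {
        key: {
            "time_to_detect": median(detect[key]),
            "time_to_contain": median(contain[key]),
        }
        for key in sorted(detect)
    }
```

Medians are used here instead of averages so that a single very long incident does not hide an otherwise improving trend.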

Alternative architectural patterns: do you really need full Kubernetes everywhere?

A lot of teams instinctively reach for a full‑blown Kubernetes cluster even for small or isolated workloads, then struggle to secure it properly. An alternative is to lean on managed app platforms and serverless where they fit, and reserve raw Kubernetes for complex, stateful or latency‑sensitive systems. For simple HTTP APIs, managed container services or fully managed functions eliminate whole classes of cluster‑level risks: no control plane to harden, no CNI plugins to patch, fewer knobs to misconfigure. Over 2021–2023, cloud provider customer stories repeatedly showed that teams using a mix of PaaS, serverless and a small number of well‑managed clusters spent significantly less time on operational security, without sacrificing agility.

This doesn’t mean “no Kubernetes”; it means respecting the operational cost of doing it right. For internal line‑of‑business tools, a platform team might offer “golden paths”: pre‑secured namespaces or templates with built‑in network policies, PodSecurity, logging, metrics and secret management wired in. Developers deploy into those paths instead of crafting raw manifests from scratch. In highly regulated sectors, some organizations even split environments by technology: sensitive workloads in isolated clusters with hardened baselines, everything else on higher‑level managed services. That way, you align your container and Kubernetes cloud security strategy with the actual risk and compliance requirements, instead of treating every microservice as equally critical.
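
A golden path can start as nothing more than a function that stamps out pre‑secured manifests. The sketch below generates a namespace with a restricted Pod Security level and a default‑deny NetworkPolicy as plain dicts, ready to be serialized to YAML or JSON. The `team`/`app` naming scheme and the function name are assumptions; the manifest fields follow the standard Namespace and NetworkPolicy APIs.

```python
def golden_path_manifests(team: str, app: str) -> list[dict]:
    """Build a pre-secured namespace plus a default-deny network policy."""
    namespace = f"{team}-{app}"
    return [
        {
            "apiVersion": "v1",
            "kind": "Namespace",
            "metadata": {
                "name": namespace,
                "labels": {
                    "team": team,
                    # Pod Security Admission level enforced in this namespace.
                    "pod-security.kubernetes.io/enforce": "restricted",
                },
            },
        },
        {
            "apiVersion": "networking.k8s.io/v1",
            "kind": "NetworkPolicy",
            "metadata": {"name": "default-deny", "namespace": namespace},
            # An empty podSelector selects every pod; listing both policy
            # types with no rules denies all ingress and egress by default.
            "spec": {"podSelector": {}, "policyTypes": ["Ingress", "Egress"]},
        },
    ]
```

Teams would then add narrowly scoped allow rules on top of the default‑deny baseline rather than starting from an open network.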

Tooling and market standards: from scanners to managed services

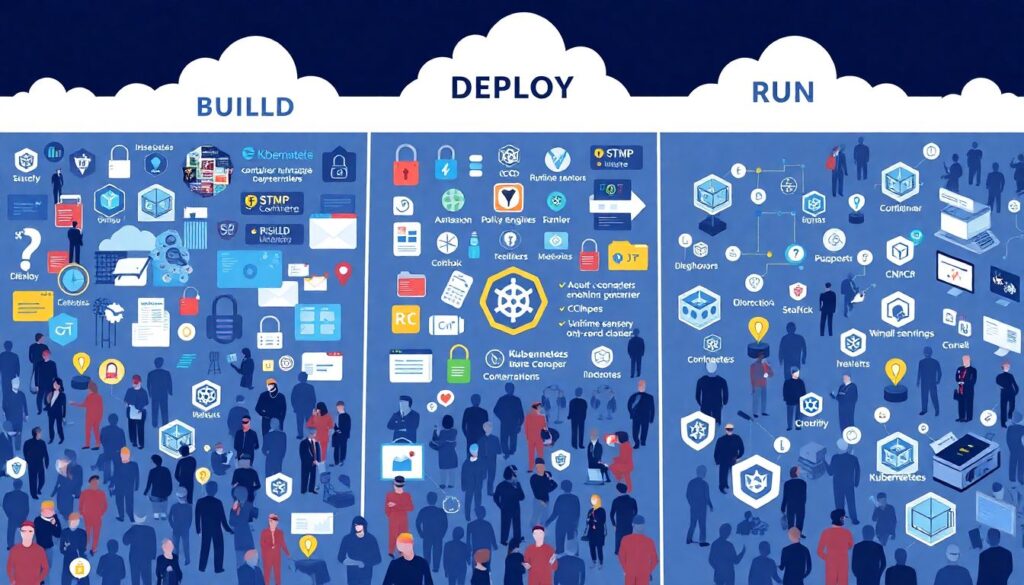

By now, security tools for Kubernetes in the cloud form a crowded market: image scanners, runtime sensors, admission controllers, CNAPPs, CSPMs, you name it. The trick is to pick a coherent set that covers three planes: build, deploy and run. On the build side, you want image scanning and dependency analysis wired into CI with clear, agreed‑upon policies about which severities can block a release. On the deploy side, you rely on policy engines, signed images and cluster configuration scanning. At runtime, you need behavioral detection for suspicious activity, plus strong, searchable logging. Industry data from 2021–2023 shows a gradual convergence: more companies choose platform‑style suites instead of twelve separate point solutions, mostly to reduce alert fatigue and integration headaches.
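
The “which severities can block a release” agreement is easy to encode once scanner output is normalized. This sketch assumes each finding is a dict with `severity` and an optional `fixed_version`; that shape is invented for the example and does not match any particular scanner’s report format.

```python
SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


def blocking_findings(
    findings: list[dict],
    block_at: str = "HIGH",
    ignore_unfixed: bool = True,
) -> list[dict]:
    """Return the findings that should fail the build.

    A finding blocks when its severity is at or above `block_at`. With
    `ignore_unfixed`, findings without a known fix are reported elsewhere
    but do not block, since the team cannot act on them yet.
    """
    threshold = SEVERITY_RANK[block_at]
    blocking = []
    for finding in findings:
        # Unknown severity strings rank as 0 and never block in this sketch.
        rank = SEVERITY_RANK.get(str(finding["severity"]).upper(), 0)
        if rank < threshold:
            continue
        if ignore_unfixed and not finding.get("fixed_version"):
            continue
        blocking.append(finding)
    return blocking
```

In CI, a non‑empty result would translate into a failed job; keeping the threshold and the unfixed‑vulnerability rule as explicit parameters makes the policy something teams can discuss and change deliberately.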

Managed services have become the default starting point for many, which can be a huge security win if you understand the shared responsibility model. Using a managed control plane offloads patching and hardening of the Kubernetes control‑plane nodes, which was a serious pain for self‑managed users a few years ago. At the same time, cloud‑native logging, managed identities and integrated secret stores make it much easier to close common gaps, as long as you actually turn them on and configure them. Providers have been publishing more prescriptive hardening guides and reference architectures, and in many RFPs today, a baseline of Kubernetes cloud security best practices plus integrated security scanning is just assumed, not a competitive differentiator.

When to bring in specialists and how pros actually work day‑to‑day

If you’re feeling overwhelmed, you’re not alone; even big enterprises routinely bring in Kubernetes and container security consultants to bootstrap their posture. The most useful consultants don’t just drop a 150‑page report; they sit with platform, security and product teams, map out your actual risk, then help you codify guardrails and pipelines. Over the last three years, a common pattern has emerged: start with a focused assessment of two or three key apps, fix obvious issues in CI and RBAC, roll out basic policies cluster‑wide, then iterate. The endgame is a platform where security checks are invisible most of the time because they’re baked into templates and automation, not bolted on via manual reviews.

The day‑to‑day lifehack that experienced teams share is simple but powerful: prioritize visibility first, then enforcement. Turn on API audit logs, centralize pod logs, export cluster events and wire them into a SIEM or at least an alerting system that people actually watch. Run periodic configuration and image scans even in non‑prod, and treat findings as backlog items with owners and SLAs. Once the organization can see what’s happening and how often policies would have blocked bad configurations, you get political capital to flip more switches to “enforce”. That gradual, metrics‑driven approach is how mature teams reach a strong baseline without burning out developers or convincing them that “security” just means “no”.
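
The “how often would this policy have blocked something” question can be answered directly from audit‑mode results. Below is a minimal sketch that takes a list of audit events, each assumed to be a dict with a `policy` name and a `compliant` flag (a hypothetical shape, not any tool’s real export format), and lists the policies quiet enough to switch to enforcement.

```python
from collections import Counter


def enforcement_candidates(audit_events: list[dict], max_violations: int = 0) -> list[str]:
    """Return policies whose violation count is at or below `max_violations`.

    Every policy that appears in the events is counted, including those
    with zero violations, so clean policies show up as candidates too.
    """
    violations = Counter()
    for event in audit_events:
        # Adding 0 still registers the policy name in the counter.
        violations[event["policy"]] += 0 if event["compliant"] else 1
    return sorted(
        policy for policy, count in violations.items() if count <= max_violations
    )
```

Feeding this a week or a month of audit events gives a defensible, data‑backed list of switches to flip first, which is usually an easier conversation than enforcing everything at once.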